ERASMUS+ lectures in Foggia

Lecture-workshops at Conservatorio di musica Umberto Giordano, Italy - facets of electroacoustic music.

This year’s Innovation in Music conference took place at Kristiania College in Oslo, Norway, from the 14th to the 16th of June. The abstract I originally submitted had the title Innovating Inclusive Dance: Integrating Wheelchairs and Sonification in Interactive Performances. However, due to various circumstances, I had to change the focus of the paper somewhat, and the title was therefore adapted to Facilitating development of interactive wheelchair sonifications. During this project I have been collaborating with wheelchair dancer Elen Øien from DansiT, in Trondheim.

The work can be related to two different contexts, one artistic and the other academic. The artistic context is that of inclusive dance, a movement within contemporary dance which tries to open dance to all sorts of bodies and levels of ability, thereby seeing the dancerly potential in every kind of movement and every type of body. Dancers in wheelchairs and with different levels of ability can thereby be accepted on equal terms with the stereotypical professional ballet dancer. The academic context is that of sonification, a field of research that investigates how different forms of data can be represented in sound. Sonification of wheelchair movements has earlier been attempted by a team of researchers at KTH, who investigated the degree to which a set of wheelchair movements could be facilitated by sonification (Almqvist Gref et al. 2016).

The research questions I originally wanted to address with the presented research were the following:

Which wheelchair movements are interesting and relevant in wheelchair dance?

How can the development of interactive sonifications of a wheelchair movement repertoire be facilitated so that it demands less effort and involves fewer practical challenges?

How can sonifications be designed so that they are intuitive, distinct and stimulate movement and engagement?

However, it turned out that the third research question could not be fully addressed. Nevertheless, since it reveals some of my intentions with the project, I found it useful to include it here.

In earlier phases of the project, the plan was to bring the project towards a performance taking place in the early summer of 2024. However, progress has been halted several times by different issues, including health and the availability and accessibility of spaces. Because of this, it seemed practical to have some way of developing the sonifications without having to meet regularly to try out how different movements would sound. This resulted in the need for a tool to record and play back sensor data that could be synchronized with video playback.

As in several other interactive dance projects I have been involved in, I used the X-IO NGIMU sensors to track the movement of the wheelchair. Two were fastened to the spokes of the wheels, while a third was attached to the transverse bar behind the seat of the chair. The following data streams were transmitted over Open Sound Control (OSC) to a computer running Max:

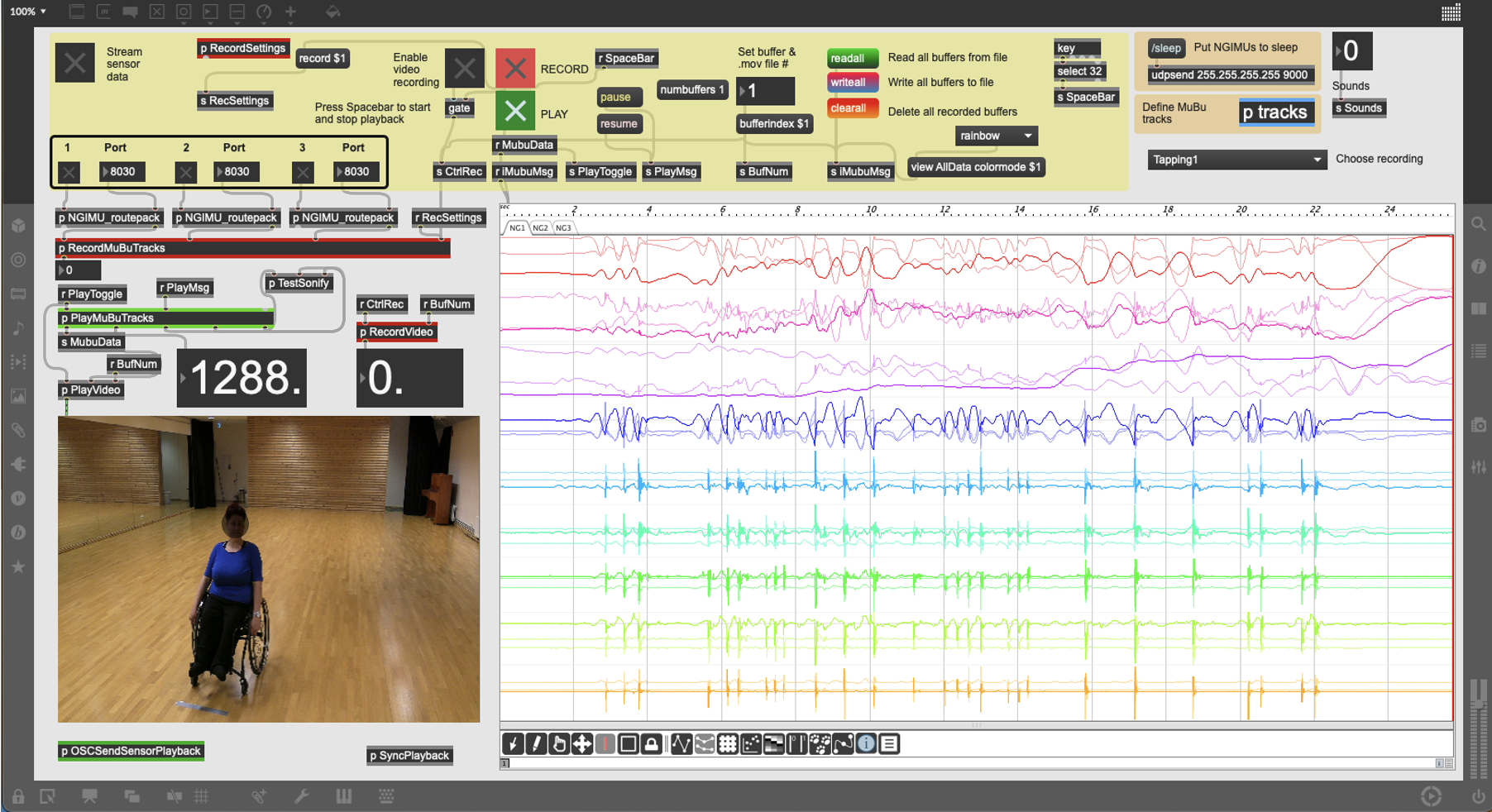
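To illustrate what arrives at the computer, here is a minimal Python sketch of how a single OSC message carrying float values is laid out and decoded, following the OSC 1.0 message format. It is not the Max patch used in the project. The address `/wheel/left/gyro` is a hypothetical placeholder, only float arguments are handled, and OSC bundles (which a real device may use to wrap messages with a timestamp) are not covered.

```python
import struct


def _pad(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def _read_padded_string(data: bytes, pos: int) -> tuple[str, int]:
    """Read an OSC string starting at pos; return it and the next aligned position."""
    end = data.index(b"\x00", pos)
    text = data[pos:end].decode("ascii")
    # Skip the terminator and any padding up to the next 4-byte boundary.
    return text, (end + 4) & ~3


def encode_osc_floats(address: str, values: list[float]) -> bytes:
    """Build a single OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    return _pad(address) + _pad(type_tags) + struct.pack(f">{len(values)}f", *values)


def decode_osc_floats(packet: bytes) -> tuple[str, list[float]]:
    """Decode a single OSC message whose arguments are all 32-bit floats."""
    address, pos = _read_padded_string(packet, 0)
    type_tags, pos = _read_padded_string(packet, pos)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    values = []
    for tag in type_tags[1:]:
        if tag != "f":
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
        (value,) = struct.unpack_from(">f", packet, pos)  # big-endian float32
        values.append(value)
        pos += 4
    return address, values


# Hypothetical address and values, used only to show the round trip.
packet = encode_osc_floats("/wheel/left/gyro", [1.0, -2.5, 0.25])
address, values = decode_osc_floats(packet)
```

In practice an OSC library or Max's own OSC objects would do this work; the sketch only shows that each data stream is an address followed by a short list of numbers.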

In Max, with the help of the MuBu toolbox, I made a patch that could record these data streams. The patch could also play back the sensor data in sync with video playback.
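The record-and-playback idea can be sketched outside Max as well. The following Python example is a simplified illustration, not the MuBu patch itself: sensor frames are stored with timestamps, and during playback the frame matching the current video time is looked up. The class and function names, and the `offset` parameter, are assumptions made for this sketch.

```python
from bisect import bisect_right
from dataclasses import dataclass, field


@dataclass
class SensorTrack:
    """Timestamped sensor frames for one data stream (hypothetical structure)."""

    times: list[float] = field(default_factory=list)
    frames: list[tuple[float, ...]] = field(default_factory=list)

    def record(self, t: float, frame) -> None:
        """Append a frame; timestamps must arrive in non-decreasing order."""
        if self.times and t < self.times[-1]:
            raise ValueError("timestamps must be non-decreasing")
        self.times.append(t)
        self.frames.append(tuple(frame))

    def frame_at(self, t: float):
        """Return the most recent frame at or before t, or None before the first."""
        i = bisect_right(self.times, t)
        return self.frames[i - 1] if i else None


def playback_frame(track: SensorTrack, video_time: float, offset: float = 0.0):
    """Look up the sensor frame for a video time.

    offset is the assumed difference between the sensor clock and the
    video clock, so that sensor_time = video_time + offset.
    """
    return track.frame_at(video_time + offset)


track = SensorTrack()
track.record(0.0, (0.0, 0.0, 1.0))
track.record(0.1, (0.1, 0.0, 1.0))
track.record(0.2, (0.2, 0.0, 1.0))
```

Looking up the latest frame at or before the requested time keeps playback simple; interpolating between neighbouring frames would be a possible refinement.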

SonoWheelchair recording and playback tool


Based on a previous session with Elen in 2019, in which I recorded a number of different movements from her repertoire, I made video and sensor data recordings on May 24th. Fifteen different movements were recorded in 2-4 versions each, resulting in a fairly rich dataset. These movements included

In addition to these specific movements and their variants, we also recorded two short, freely improvised sessions. These sessions confirmed that a number of the movements were actually applied in practice.

The sensor recordings were subsequently synchronized with the video using a “tapping” movement of the front wheels, a variant of the “clapper” used when shooting film. With the tap as a reference, the video and sensor recordings were aligned manually.
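The alignment in this project was done by hand. As an illustration of the underlying arithmetic only, the Python sketch below finds the first sharp spike in an accelerometer recording and computes the offset between the sensor clock and the video clock from it. The threshold value, the unit (g) and the function names are assumptions for this sketch, and automatic detection is not the method that was actually used.

```python
import math


def first_tap_time(times, accel, threshold=1.5):
    """Return the timestamp of the first sample whose acceleration
    magnitude exceeds threshold (assumed to be in g), or None if no
    sample does."""
    for t, (x, y, z) in zip(times, accel):
        if math.sqrt(x * x + y * y + z * z) > threshold:
            return t
    return None


def sync_offset(sensor_tap_time, video_tap_time):
    """Offset to add to a video time to get the matching sensor time."""
    return sensor_tap_time - video_tap_time


# Made-up example data: the chair is at rest (about 1 g) until the tap.
times = [0.00, 0.01, 0.02, 0.03]
accel = [(0.0, 0.0, 1.0), (0.0, 0.0, 1.1), (0.5, 0.0, 2.0), (0.0, 0.0, 1.0)]

tap = first_tap_time(times, accel)
# Suppose the tap is seen 1.5 s into the video (read off by eye).
offset = sync_offset(tap, 1.5)
```

Once the offset is known, every video time can be converted to a sensor time by adding it, which is all the synchronization step requires.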

The next phase was designing the sonifications. Here, I wanted to make a multi-layered sonification based on more traditional musical techniques than I have usually used earlier. This resulted in six layers of sonification mapped to different features:

All mappings were implemented in Csound code wrapped as a Cabbage VST plugin running inside of Ableton Live.

Observing the captured data and listening to and viewing the resulting sonifications along with the video supported the intuition that the nine data streams from the three NGIMU sensors on the wheels and the back of the seat could capture the movements in Elen’s repertoire reliably. From the process of making the sonifications and observing the result, it can also be claimed that the tool greatly facilitates the development of sonifications, in that it allows ideas to be tested without necessarily having to try them out in practice. In the video, the sonifications also seem to be distinctly related to different movements in the repertoire. However, it remains to be seen through practical testing and evaluation whether the sonifications are intuitively grasped and whether they stimulate movement and engagement.

This paper presentation will be elaborated and extended into a chapter in a proceedings volume published by Routledge.

Almqvist Gref, A., Elblaus, L., & Falkenberg Hansen, K. (2016). Sonification as Catalyst in Training Manual Wheelchair Operation for Sports and Everyday Life. Proceedings of Sound and Music Computing Conference, Hamburg. http://urn.kb.se/resolve?urn=urn:nbn:se:kth:diva-192377